Yanchao Yang

Assistant Professor, Electrical and Computer Engineering and the Institute of Data Science

The University of Hong Kong

Email: yanchaoy at hku dot hk

Office: Room 714, Chow Yei Ching Building, HKU

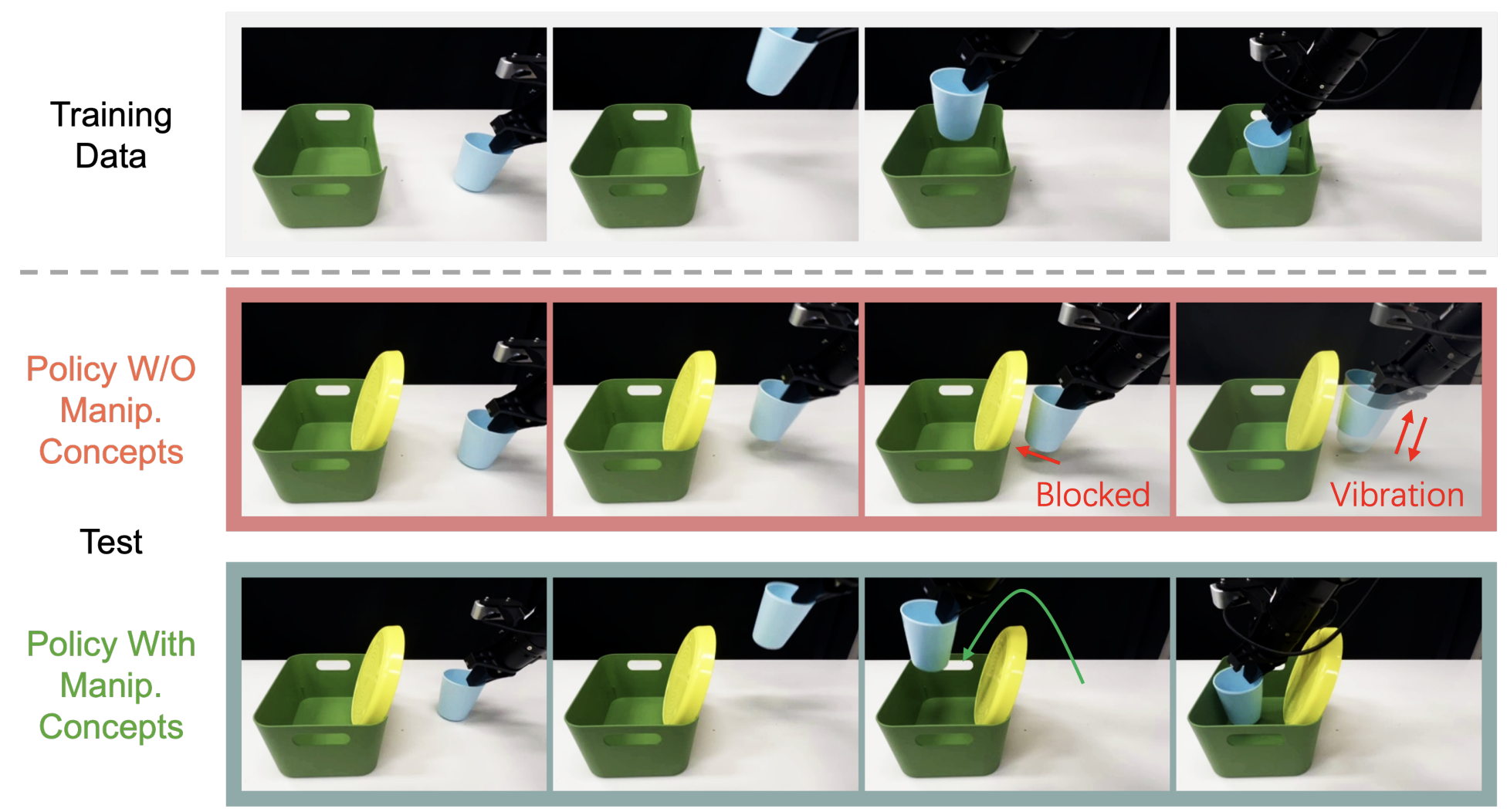

HiMaCon: Discovering Hierarchical Manipulation Concepts from Unlabeled Multi-Modal Data

Ruizhe Liu, Pei Zhou, Qian Luo, Li Sun, Jun Cen, Yibing Song, Yanchao Yang

NeurIPS 2025

arXiv/code/project page

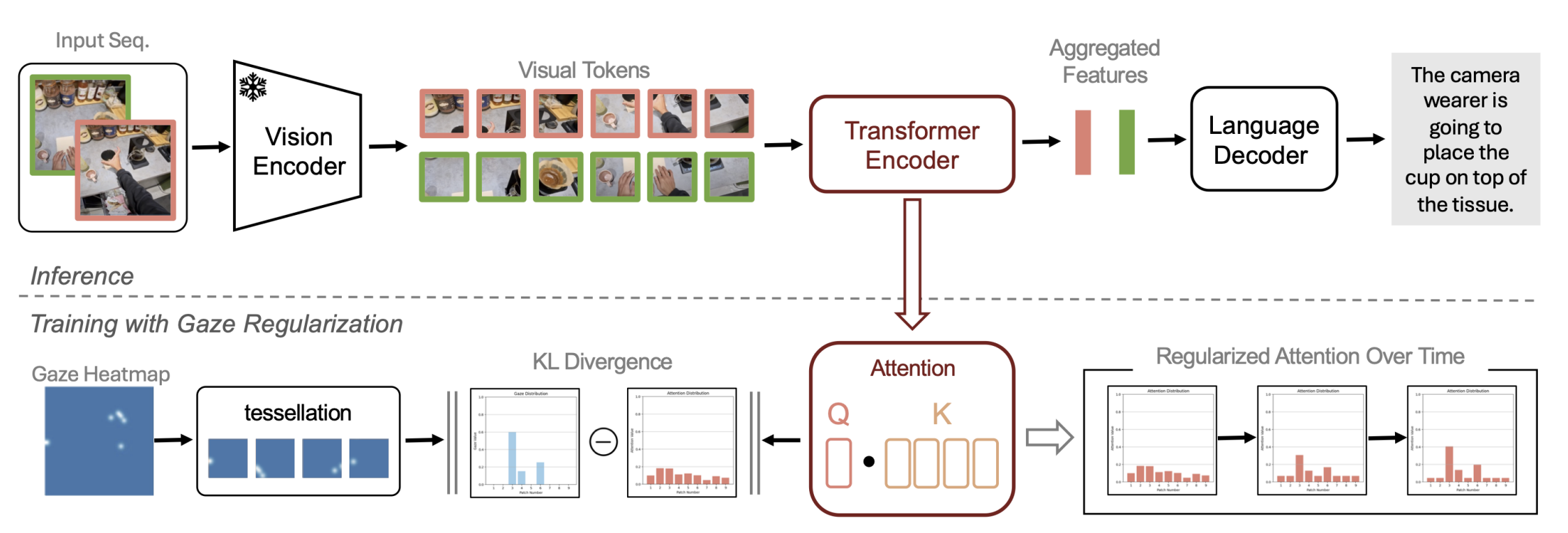

Gaze-VLM: Bridging Gaze and VLMs via Attention Regularization for Egocentric Understanding

Anupam Pani, Yanchao Yang

NeurIPS 2025

arXiv/code/project page

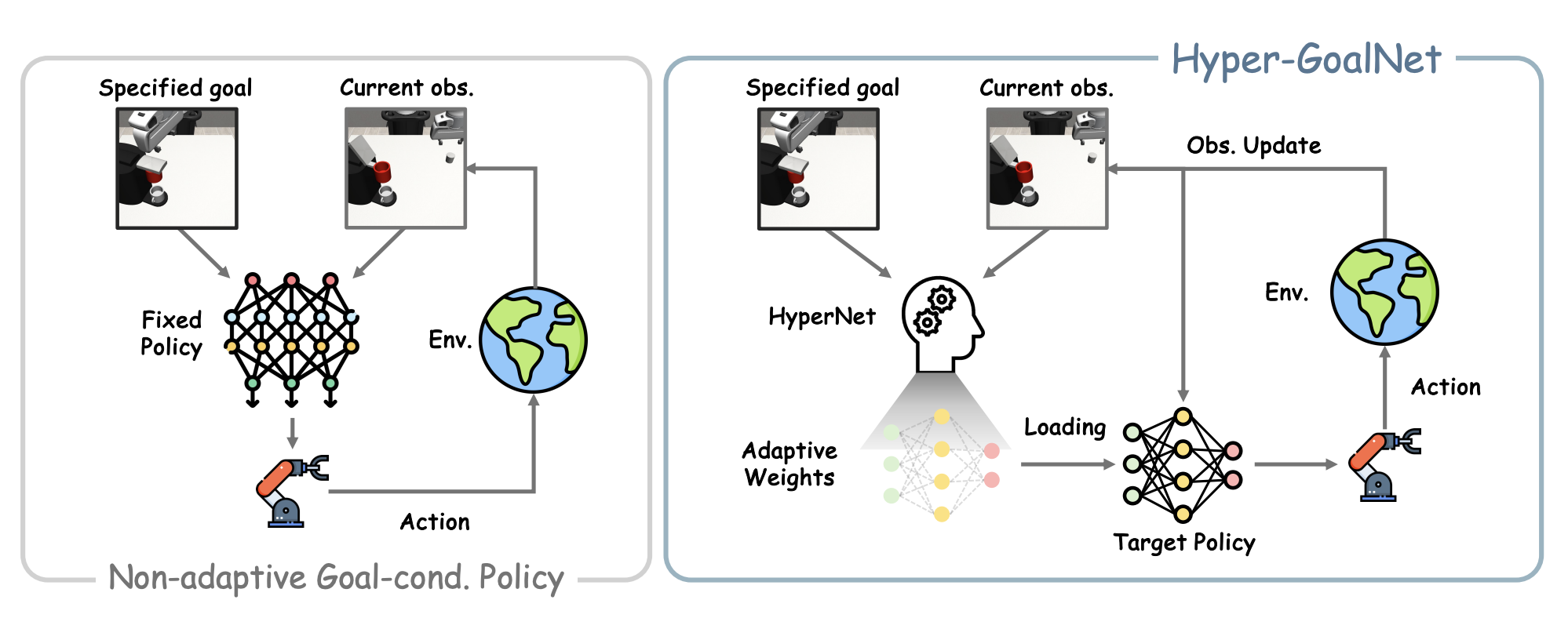

Hyper-GoalNet: Goal-Conditioned Manipulation Policy Learning with HyperNetworks

Pei Zhou, Wanting Yao, Qian Luo, Xunzhe Zhou, Yanchao Yang

NeurIPS 2025

arXiv/code/project page

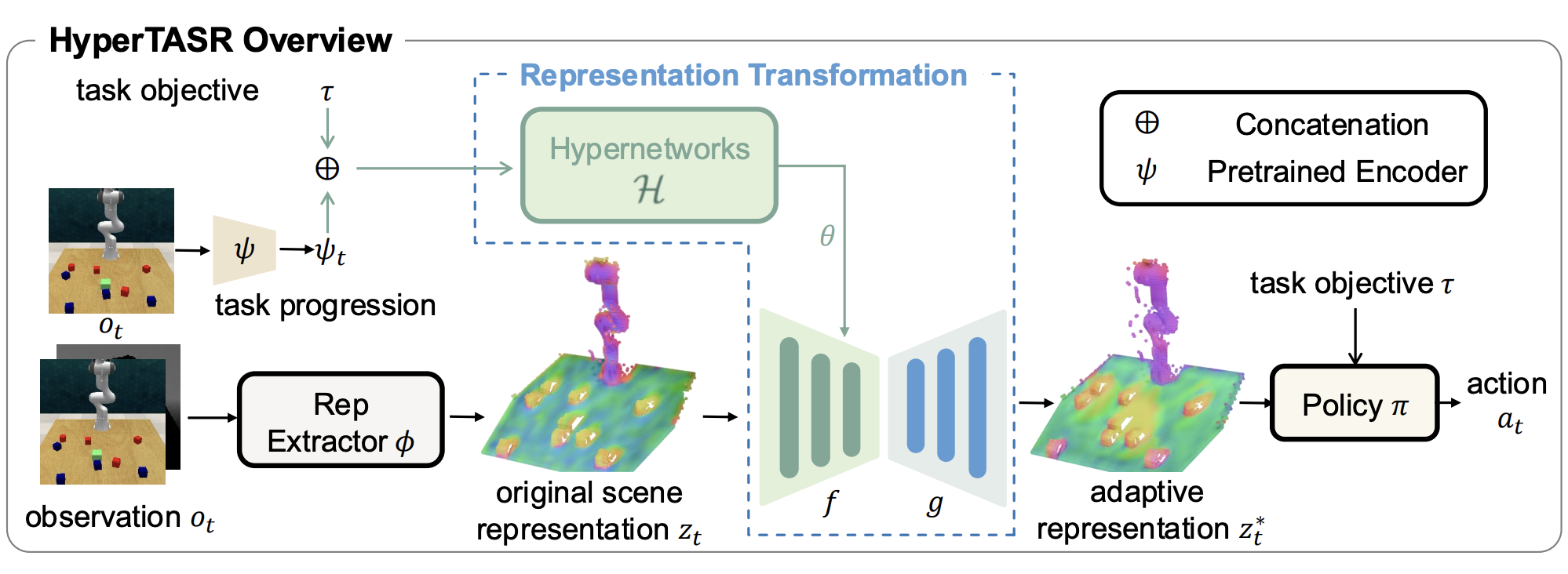

HyperTASR: Hypernetwork-Driven Task-Aware Scene Representations for Robust Manipulation

Li Sun*, Jiefeng Wu*, Feng Chen, Ruizhe Liu, Yanchao Yang

CoRL 2025

arXiv/code/project page

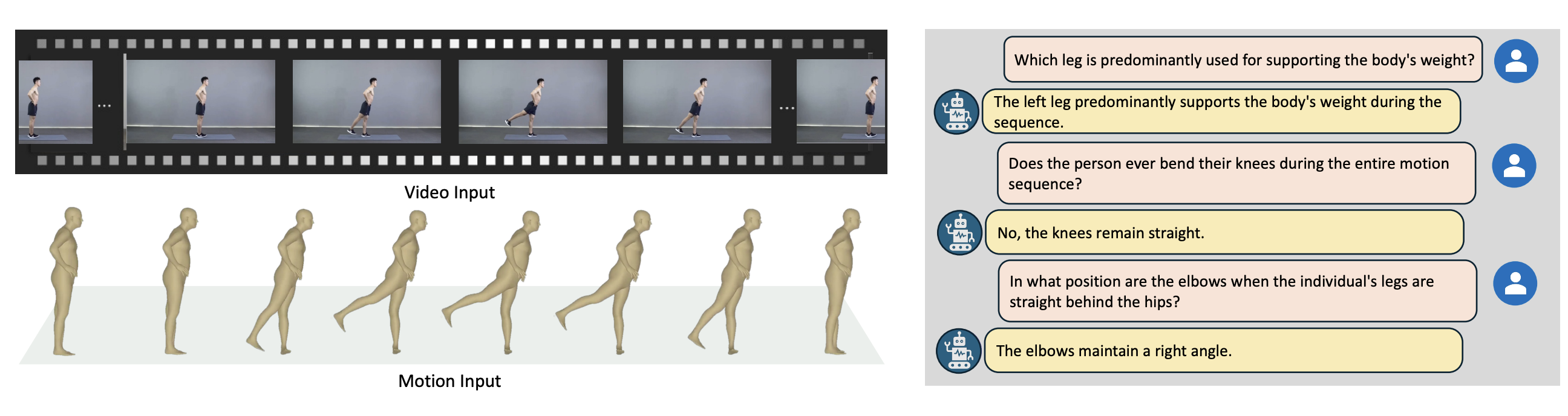

HuMoCon: Concept Discovery for Human Motion Understanding

Qihang Fang, Chengcheng Tang, Bugra Tekin, Shugao Ma, Yanchao Yang

CVPR 2025

arXiv/code/project page

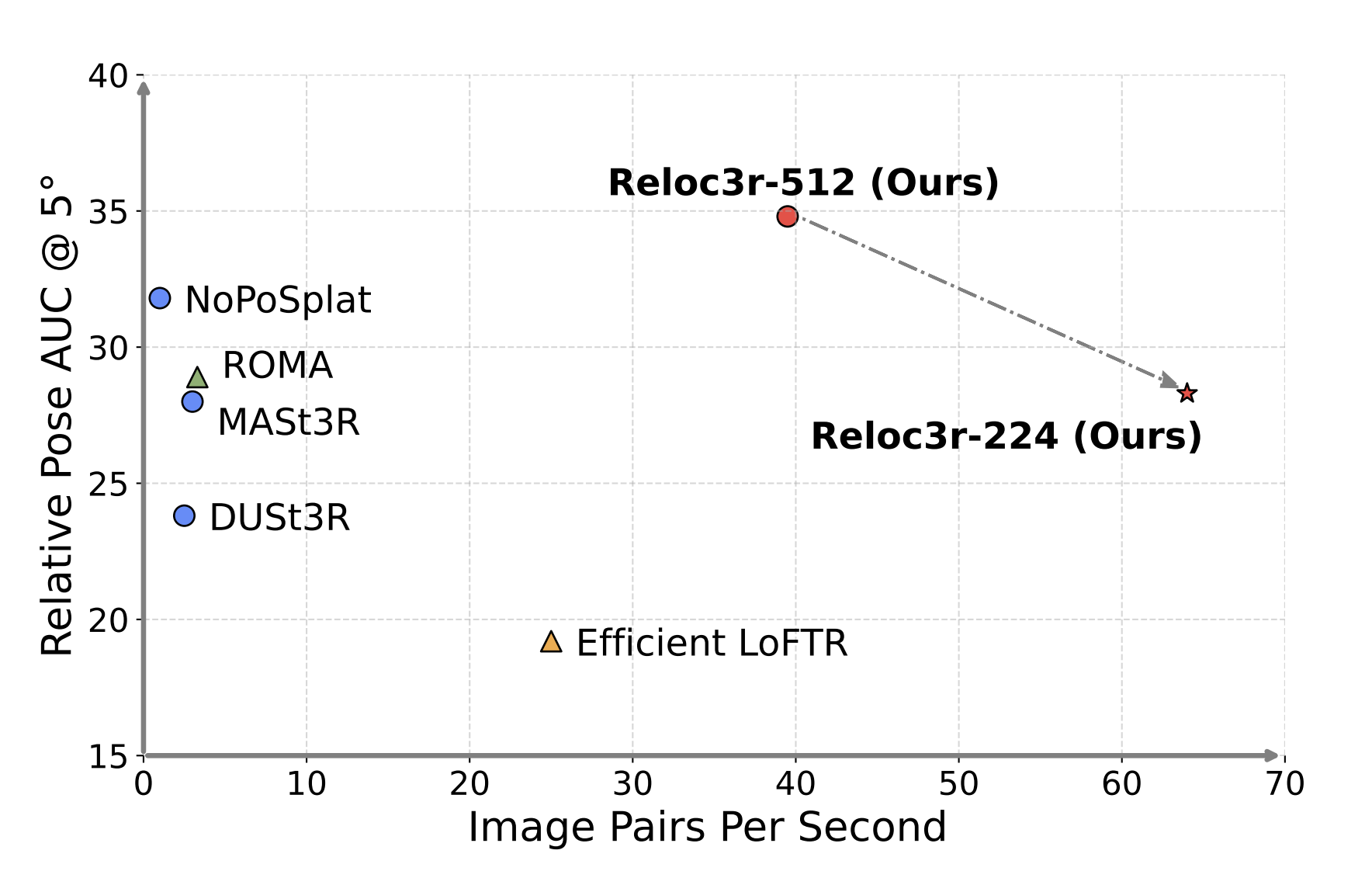

Reloc3r: Large-Scale Training of Relative Camera Pose Regression for Generalizable, Fast, and Accurate Visual Localization

Siyan Dong*, Shuzhe Wang*, Shaohui Liu, Lulu Cai, Qingnan Fan, Juho Kannala, Yanchao Yang

CVPR 2025

arXiv/code/project page

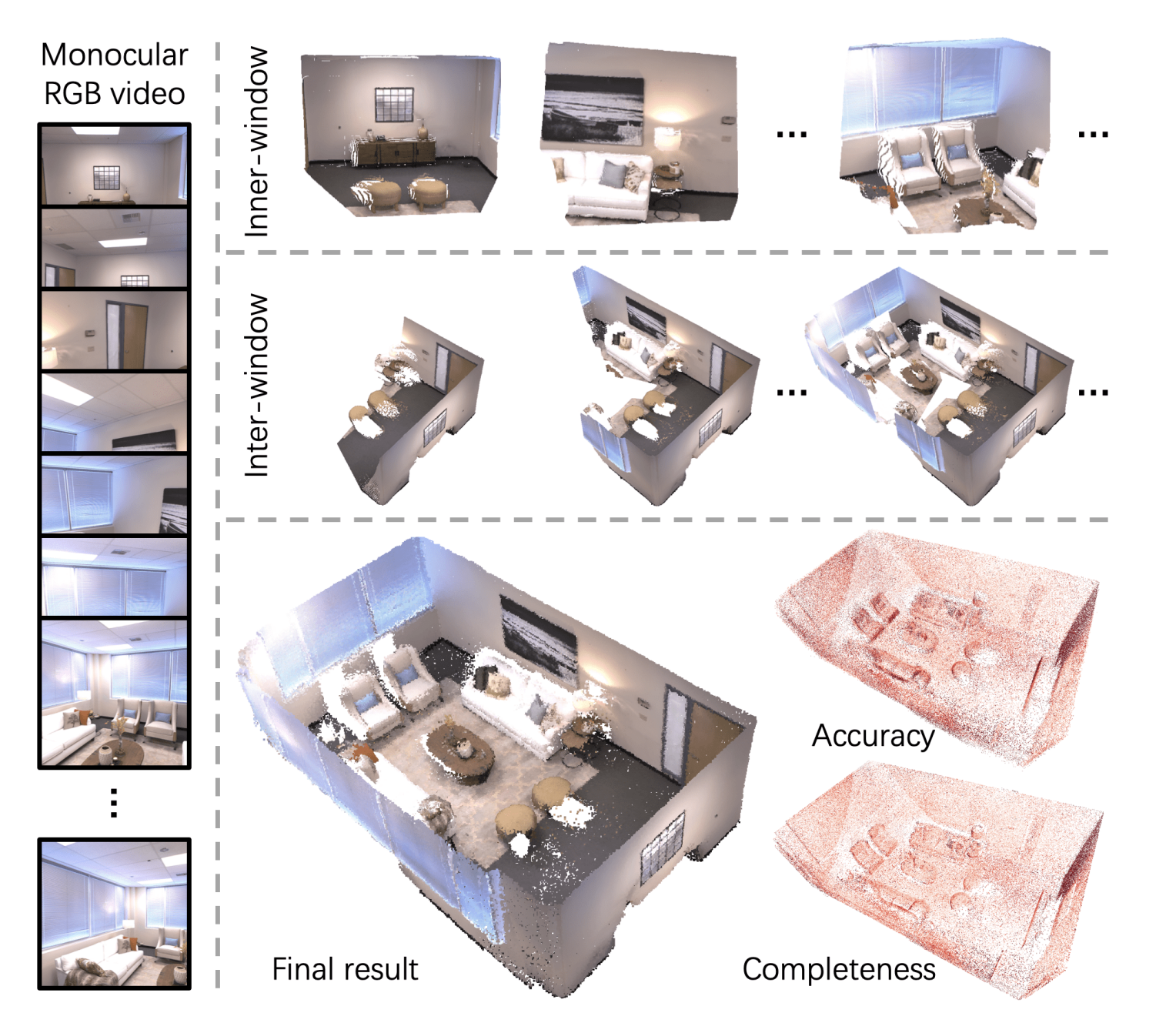

SLAM3R: Real-Time Dense Scene Reconstruction from Monocular RGB Videos

Yuzheng Liu*, Siyan Dong*, Shuzhe Wang, Yingda Yin, Yanchao Yang, Qingnan Fan, Baoquan Chen

CVPR 2025, Spotlight

arXiv/code/project page

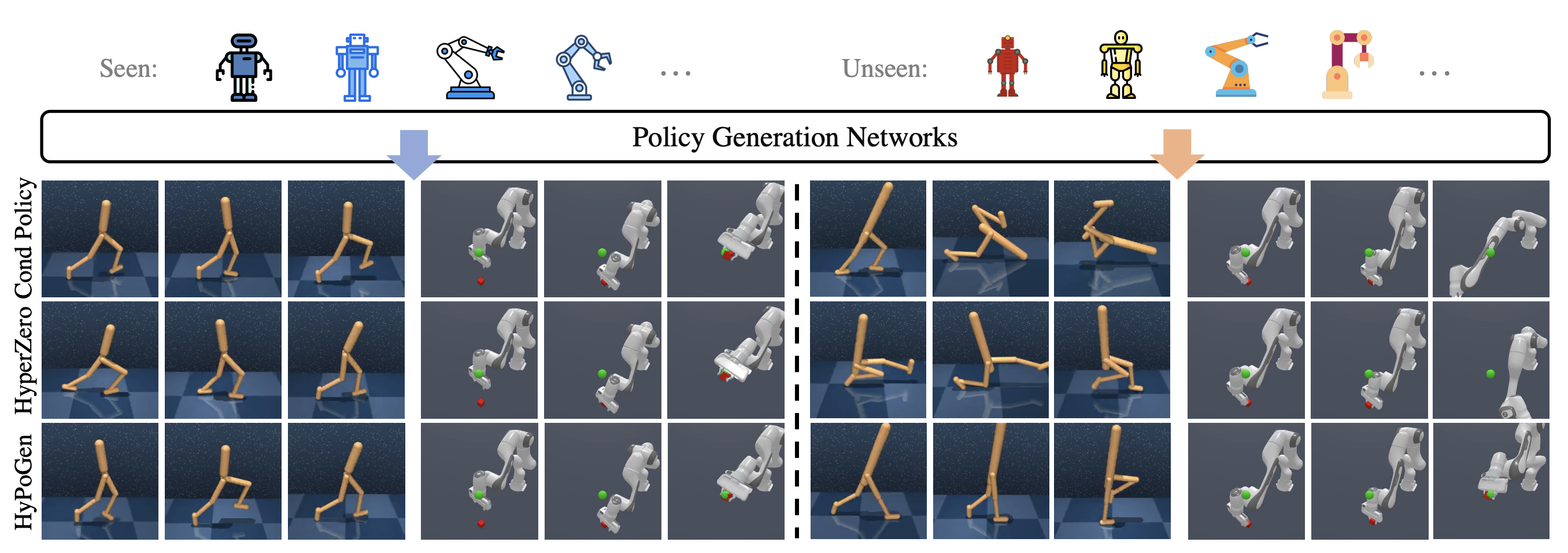

HyPoGen: Optimization-Biased Hypernetworks for Generalizable Policy Generation

Hanxiang Ren*, Li Sun*, Xulong Wang, Pei Zhou, Zewen Wu, Siyan Dong, Difan Zou, Youyi Zheng, Yanchao Yang

ICLR 2025

arXiv/code/project page

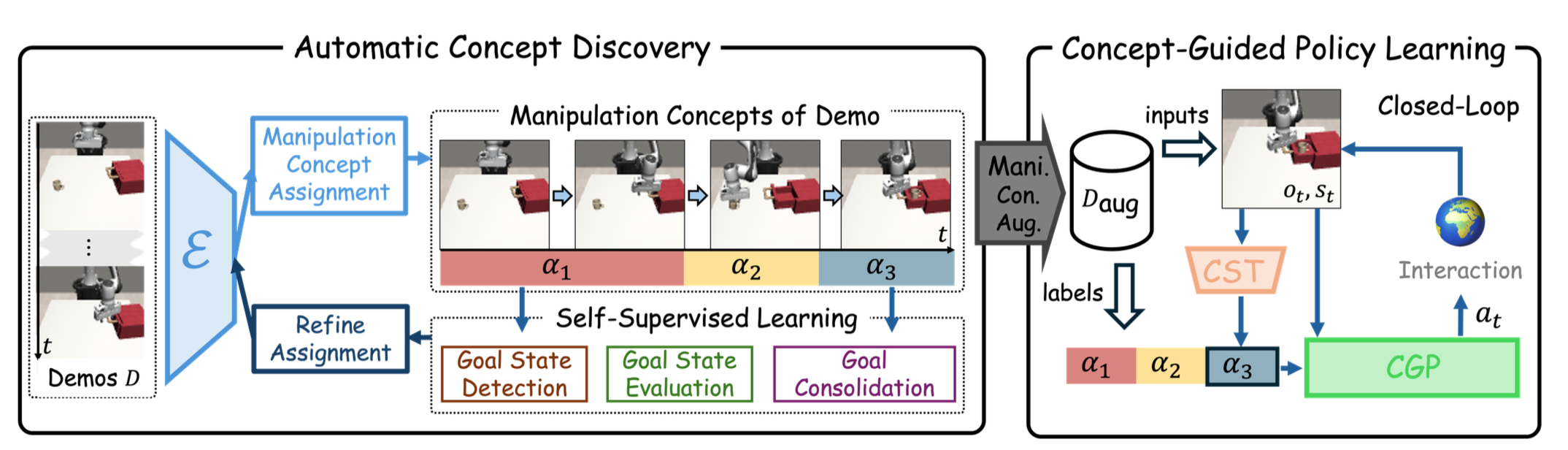

AutoCGP: Closed-Loop Concept-Guided Policies from Unlabeled Demonstrations

Pei Zhou*, Ruizhe Liu*, Qian Luo*, Fan Wang, Yibing Song, Yanchao Yang

ICLR 2025, Spotlight

arXiv/code/project page

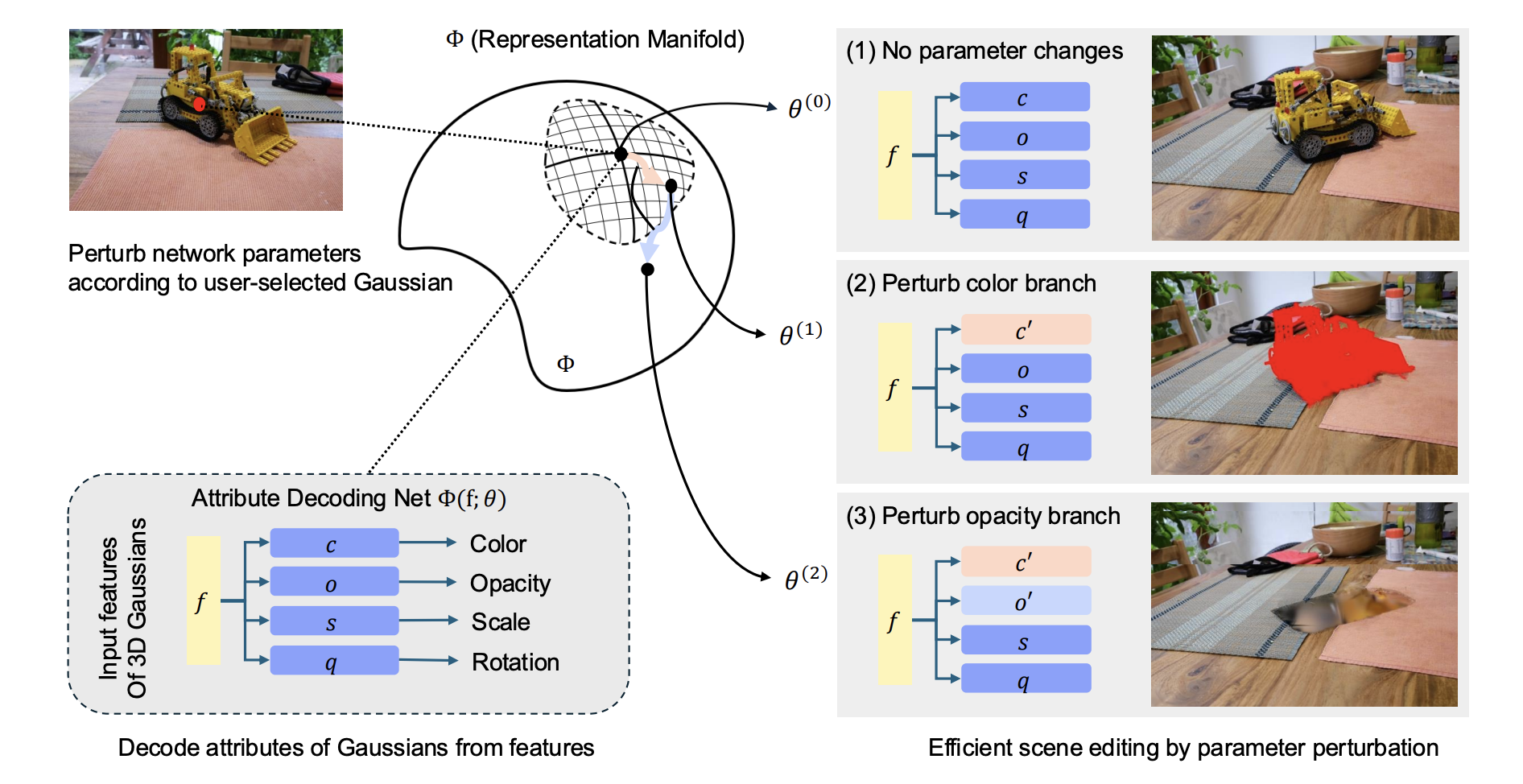

InfoGS: Efficient Structure-Aware 3D Gaussians via Lightweight Information Shaping

Yunchao Zhang, Guandao Yang, Leonidas Guibas, Yanchao Yang

ICLR 2025

arXiv/code/project page